Analyzing QUIC traffic for dynamic content delivery

A CDN utilizes a wide range of optimizations in order to deliver content efficiently, regardless of network conditions, user location, or end-user device. In this blog post, we will share our preliminary research into optimizing the delivery of dynamic content using the modern QUIC protocol.

Generally, content can be divided into two basic groups:

- Static content – Content that does not change frequently. For example, an image, an HTML file, or a video-on-demand (VOD) asset.

- Dynamic content – Content that changes or is generated in near real-time and needs to be delivered with as little delay as possible. For example, a video livestream.

Delivering dynamic content is generally much more demanding and complex, as we discussed in a previous blog post.

Static and dynamic content can be delivered over different protocols. Caching dynamic content is often more complex because only a few streaming protocols can easily be cached without understanding their content (for example, RTMP, RTC, WebRTC, and RTP are not easily cacheable). Other protocols and formats, such as HLS, DASH, or CMAF, are easier to cache, with the downside of potentially delivering stale content [1].

Delivering dynamic content is latency-sensitive, and even low packet loss can lead to video buffering and a degraded user experience. One factor that can significantly affect a CDN's performance is the choice of congestion control algorithm (CCA) and its configuration parameters. A poorly chosen or misconfigured CCA can lead to subpar performance when delivering dynamic content; for example, when BBRv1 and Cubic flows share the same bottleneck at the same time, the bandwidth can be split unfairly between them.
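One common way to put a number on an "unfair share of bandwidth" is Jain's fairness index, J = (Σxᵢ)² / (n · Σxᵢ²), which equals 1 when all n flows receive the same throughput and falls toward 1/n as a single flow takes everything. The sketch below is only an illustration of the metric; the throughput values in the example are hypothetical, not measurements from our PoPs.

```python
from typing import Sequence


def jain_fairness(throughputs: Sequence[float]) -> float:
    """Jain's fairness index for per-flow throughputs sharing one bottleneck.

    Returns 1.0 for a perfectly even split and 1/n when one flow gets everything.
    """
    if not throughputs:
        raise ValueError("need at least one flow")
    total = sum(throughputs)
    squares = sum(x * x for x in throughputs)
    if squares == 0:
        raise ValueError("all flows have zero throughput")
    return total * total / (len(throughputs) * squares)


# Hypothetical example: two flows (say, BBRv1 and Cubic) on the same link, in Mbit/s.
print(jain_fairness([50.0, 50.0]))  # even split, prints 1.0
print(jain_fairness([80.0, 20.0]))  # one flow dominates, about 0.735
```

The index is scale-free, so it can be compared across links of different capacity.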

QUIC is a relatively new connection-oriented transport protocol. It was first implemented and deployed by Google in 2012, was standardized by the IETF as RFC 9000 [5], and is maintained by the IETF QUIC working group [2]. Like TCP, QUIC operates at layer 4 of the OSI model. The most important differences from TCP are that QUIC runs on top of UDP, combines the transport and cryptographic handshakes instead of performing a separate TCP three-way handshake, multiplexes independent streams within a single connection, and mandates TLS 1.3 encryption. Also, unlike TCP, QUIC is not part of the mainline Linux kernel networking stack, and most implementations are provided by third-party libraries running in user space.
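To see why avoiding the separate TCP handshake matters for latency-sensitive delivery, the sketch below counts round trips before the first response byte under a deliberately simple model: TCP spends one round trip on its handshake and TLS 1.3 one more, QUIC combines both into a single round trip, and a resumed QUIC connection can send its request as 0-RTT data. The model ignores packet loss, server processing time, and TCP Fast Open; the names are our own and hypothetical.

```python
from enum import Enum


class Setup(Enum):
    TCP_TLS13 = "TCP + TLS 1.3"
    QUIC_1RTT = "QUIC, new connection"
    QUIC_0RTT = "QUIC, 0-RTT resumption"


# Round trips spent on handshakes before the client can send its first request.
# Simplified model: no loss, no TCP Fast Open, no server think time.
HANDSHAKE_RTTS = {
    Setup.TCP_TLS13: 2,  # 1 RTT for SYN/SYN-ACK + 1 RTT for the TLS 1.3 handshake
    Setup.QUIC_1RTT: 1,  # transport and TLS 1.3 handshakes are combined
    Setup.QUIC_0RTT: 0,  # request travels with the first handshake flight
}


def time_to_first_byte_ms(setup: Setup, rtt_ms: float) -> float:
    """Handshake round trips plus one round trip for the request/response."""
    return (HANDSHAKE_RTTS[setup] + 1) * rtt_ms


for s in Setup:
    print(f"{s.value}: {time_to_first_byte_ms(s, 80.0):.0f} ms")
```

With an 80 ms RTT this gives 240 ms, 160 ms, and 80 ms respectively, so the handshake saving grows linearly with the client's distance from the PoP.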

Because QUIC takes over the role TCP plays as a reliable transport, it has to solve the same congestion control and flow control problems.

Choosing an adequate CCA and tuning it correctly depends on the wide variety of conditions in which a CDN has to operate, such as network characteristics, device configuration, topology, and the scale of the CDN, to name a few.

Our experiments

We ran our QUIC experiments in every Point of Presence (PoP) of the CDN to gain broad insights into dynamic content delivery and to compare network performance across the world. Here we present four representative PoPs, each with different network characteristics, selected from North America, Europe, the Middle East, and Asia.

Within each PoP, we use separate physical servers and distinct caching applications for static and dynamic content, which ensures that the data we collect during testing comes exclusively from dynamic content. Dynamic content is handled by a custom in-house caching application (CA) built on the Google Quiche library [3], which is RFC-compliant and one of the leading QUIC implementations.

The Google Quiche library already exposes per-connection metrics [4], which we wire into the CA. We collect and aggregate these metrics every 5 seconds while a connection is active, and once more when the connection closes.
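The sampling schedule described above (one snapshot every 5 seconds while the connection is active, plus a final one at close) can be sketched as follows. It is written in Python for readability and does not use the Quiche API; the `ConnectionSampler` and `StatsSnapshot` classes and all of their fields are hypothetical stand-ins for the real connection statistics.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StatsSnapshot:
    # Hypothetical subset of per-connection metrics; the real QuicConnectionStats
    # struct [4] uses its own field names and carries many more counters.
    timestamp_s: float
    cwnd_bytes: int
    smoothed_rtt_ms: float
    bytes_sent: int
    final: bool = False


@dataclass
class ConnectionSampler:
    """Keeps one snapshot per `interval_s` while a connection is active, plus one at close."""

    interval_s: float = 5.0
    samples: List[StatsSnapshot] = field(default_factory=list)
    next_due_s: float = 0.0

    def on_tick(self, now_s: float, cwnd_bytes: int, srtt_ms: float, bytes_sent: int) -> None:
        # Called from the connection's event loop; records only when the interval has elapsed.
        if now_s >= self.next_due_s:
            self.samples.append(StatsSnapshot(now_s, cwnd_bytes, srtt_ms, bytes_sent))
            self.next_due_s = now_s + self.interval_s

    def on_close(self, now_s: float, cwnd_bytes: int, srtt_ms: float, bytes_sent: int) -> None:
        # Always record a final snapshot so that short connections are still counted.
        self.samples.append(StatsSnapshot(now_s, cwnd_bytes, srtt_ms, bytes_sent, final=True))


sampler = ConnectionSampler()
for second in range(13):  # simulated 1 s event-loop ticks
    sampler.on_tick(float(second), cwnd_bytes=64_000, srtt_ms=40.0, bytes_sent=second * 100_000)
sampler.on_close(12.5, cwnd_bytes=64_000, srtt_ms=40.0, bytes_sent=1_250_000)
print([s.timestamp_s for s in sampler.samples])  # [0.0, 5.0, 10.0, 12.5]
```

The final snapshot matters because many dynamic-content connections are short-lived and would otherwise be represented by at most one periodic sample.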

QUIC version analysis

The Quiche library supports multiple QUIC versions [3]; the version is determined during the initial connection negotiation between the client and the server. Since QUIC was standardized in RFC 9000 [5], traffic has shifted toward the RFC version, which now dominates in the CDN's deployment. However, some CDN clients still use draft versions of QUIC, and some continue to rely on gQUIC, Google's experimental pre-standard version.
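A version breakdown like this can be obtained by bucketing the 32-bit version number negotiated for each connection. The sketch below is our own illustration, independent of the Quiche API, and relies only on the public numbering conventions: QUIC version 1 is 0x00000001, IETF drafts used 0xff0000NN (NN being the draft number), and gQUIC versions are four ASCII characters such as "Q050".

```python
def classify_quic_version(version: int) -> str:
    """Map a 32-bit QUIC version number to a coarse family label."""
    if not 0 <= version <= 0xFFFFFFFF:
        raise ValueError("QUIC versions are 32-bit values")
    if version == 0x00000001:
        return "RFC 9000 (QUIC v1)"
    if (version & 0xFFFFFF00) == 0xFF000000:
        # IETF drafts used 0xff0000NN, where NN is the draft number.
        return f"IETF draft-{version & 0xFF}"
    raw = version.to_bytes(4, "big")
    if raw[:1] in (b"Q", b"T") and raw[1:].isdigit():
        # gQUIC versions are four ASCII characters such as "Q050" or "T051".
        return f"gQUIC {raw.decode('ascii')}"
    return "other"


for v in (0x00000001, 0xFF00001D, int.from_bytes(b"Q050", "big")):
    print(hex(v), classify_quic_version(v))
```

Anything not matching these patterns, including newer or vendor-specific versions, lands in "other" and can be inspected separately.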

QUIC stream analysis

A single QUIC connection can support multiple multiplexed streams [5], but our observations show that only a small number of clients take advantage of this feature. In fact, over 80% of connected clients establish new connections rather than reusing an existing one with multiplexing. Our hypothesis is that the limited use of stream multiplexing is due to the lack of support in video players.
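One way to measure this is to count, per connection, the client-initiated bidirectional streams, which is where HTTP/3 carries requests. RFC 9000 encodes the initiator and the direction in the two least significant bits of the stream ID. The sketch below treats a connection with more than one request stream as reused; this is a simplifying proxy, and the function names and input format (a list of stream IDs per connection) are hypothetical.

```python
from typing import Iterable


def is_client_bidi(stream_id: int) -> bool:
    # RFC 9000, Section 2.1: bit 0 = initiator (0 = client), bit 1 = direction (0 = bidirectional).
    return (stream_id & 0x3) == 0x0


def uses_multiplexing(stream_ids: Iterable[int]) -> bool:
    """True if the client opened more than one request stream on this connection."""
    return sum(1 for sid in stream_ids if is_client_bidi(sid)) > 1


def multiplexing_share(connections: Iterable[Iterable[int]]) -> float:
    """Fraction of connections that carried more than one client request stream."""
    flags = [uses_multiplexing(ids) for ids in connections]
    return sum(flags) / len(flags) if flags else 0.0


# Hypothetical connections: the first carries a single request (stream 0) plus
# unidirectional control/QPACK streams; the second carries requests on 0, 4, and 8.
print(multiplexing_share([[0, 2, 3, 6, 7, 10, 11], [0, 2, 4, 6, 8, 10]]))  # 0.5
```

Filtering on the stream type matters: HTTP/3 always opens unidirectional control and QPACK streams, so counting all streams would overstate reuse.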

Congestion control algorithms

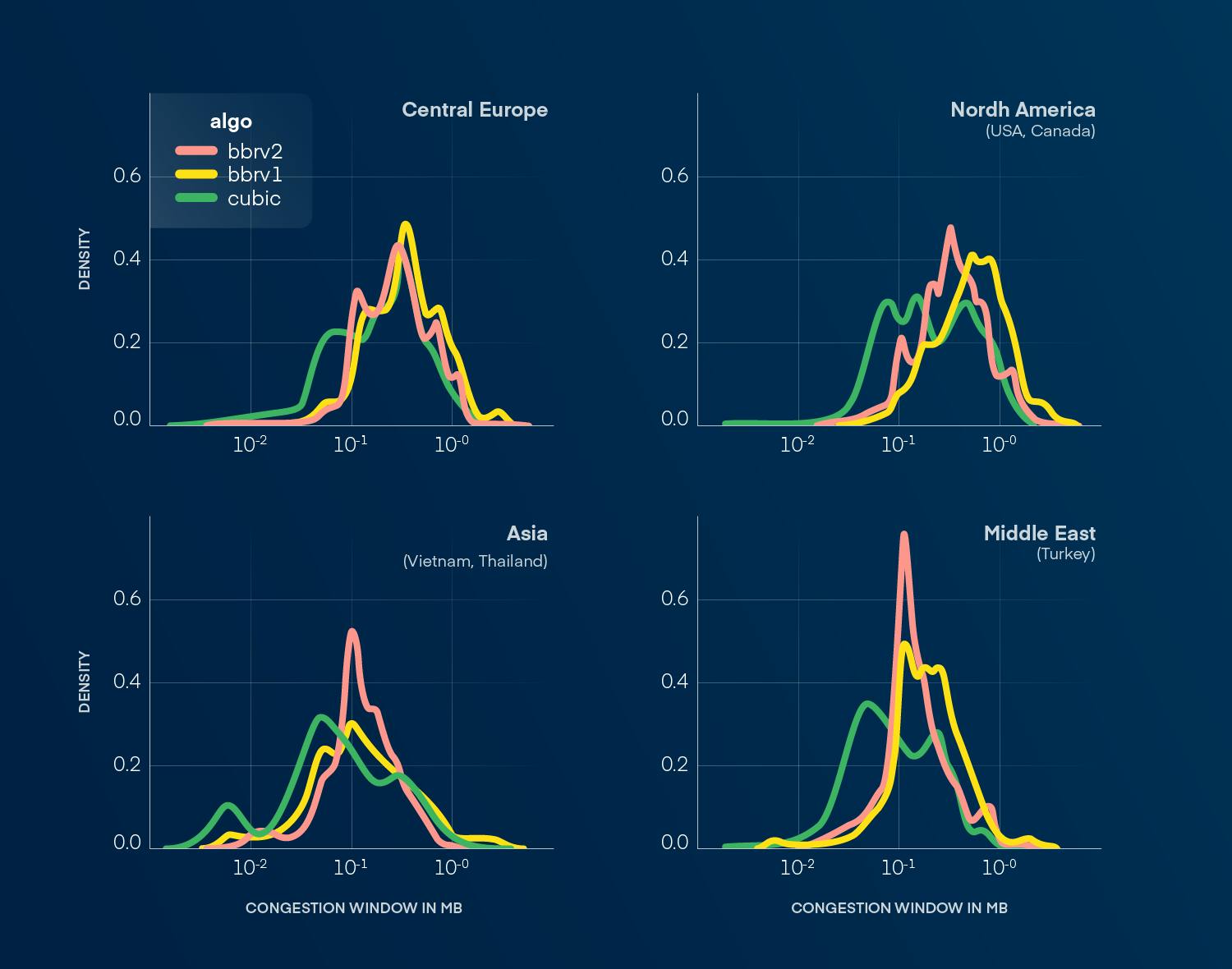

In our experiments, we analyze and compare CCA characteristics in the four PoPs mentioned above. The PoPs were chosen so that they have comparable network traffic and hardware configurations. The data is aggregated over the period from Q4 2024 to Q1 2025. We limit the selection of CCAs to algorithms implemented in the Google Quiche library and therefore compare Cubic, BBRv1, and BBRv2.

| Algorithm | Cubic | BBRv1 | BBRv2 |
|---|---|---|---|
| Model parameters | N/A | Throughput, RTT | Throughput, RTT, max inflight packets |
| On packet loss | Reduce the CWND by a constant factor | N/A | Explicit loss rate target |
| Connection startup | Slow-start | Slow-start | Slow-start |
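Cubic's loss reaction in the table can be made concrete with the CUBIC window function from RFC 9438: after a loss, the window is multiplied by β = 0.7, and it then regrows as W(t) = C·(t - K)³ + W_max, where W_max is the window just before the loss and K is the time needed to climb back to it. The sketch uses the RFC's constants and omits fast convergence and the Reno-friendly region; Google Quiche's Cubic implementation may use different defaults.

```python
from typing import Tuple

# Constants from RFC 9438; Google Quiche's Cubic defaults may differ.
C = 0.4           # scaling constant (segments / s^3)
BETA_CUBIC = 0.7  # multiplicative decrease factor applied on a loss event


def on_loss(cwnd: float) -> Tuple[float, float]:
    """Return (new_cwnd, w_max) after a congestion event, in segments."""
    return cwnd * BETA_CUBIC, cwnd


def w_cubic(t: float, w_max: float) -> float:
    """Target window (segments) t seconds after the last congestion event."""
    # K is the time it takes the cubic curve to climb back to w_max.
    k = (w_max * (1 - BETA_CUBIC) / C) ** (1 / 3)
    return C * (t - k) ** 3 + w_max


cwnd, w_max = on_loss(100.0)  # window drops from 100 to 70 segments
for t in (0.0, 2.0, 4.0, 6.0):
    print(t, round(w_cubic(t, w_max), 1))
```

The curve flattens around W_max (here K is about 4.2 s), so Cubic probes cautiously near the last point where it saw loss before accelerating again.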

Unfortunately, we could not include NewReno, the congestion controller described in RFC 9002 [6], because its poor performance negatively affected clients and their playback of dynamic content.

All CCAs use their default settings in every observed PoP.

BBR was introduced and evaluated in [7]; however, that publication focuses solely on TCP. Because QUIC runs on top of UDP, the BBR algorithms had to be reimplemented from scratch in user-space libraries, and since QUIC has its own distinct characteristics, such a reimplementation can behave differently from its TCP counterpart.
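The "Throughput, RTT" model parameters listed for BBRv1 in the table follow the design in [7]: the bottleneck bandwidth is estimated as a windowed maximum of delivery-rate samples (around 10 round trips in common implementations), the round-trip propagation time as a windowed minimum of RTT samples (around 10 seconds), and their product is the bandwidth-delay product (BDP), with the congestion window capped at about 2 × BDP. The toy class below, with hypothetical names, mirrors that model using plain deques instead of the efficient windowed filters a real implementation would use; it is not Quiche's code.

```python
from collections import deque
from typing import Deque, Tuple


class BbrModel:
    """Toy BBRv1-style path model: max-filtered bandwidth and min-filtered RTT."""

    def __init__(self, bw_window_rounds: int = 10, rtt_window_s: float = 10.0, cwnd_gain: float = 2.0):
        self.bw_window_rounds = bw_window_rounds
        self.rtt_window_s = rtt_window_s
        self.cwnd_gain = cwnd_gain
        self._bw: Deque[Tuple[int, float]] = deque()     # (round, delivery rate in bytes/s)
        self._rtt: Deque[Tuple[float, float]] = deque()  # (time in s, RTT in s)

    def on_ack(self, round_count: int, now_s: float, delivery_rate: float, rtt_s: float) -> None:
        self._bw.append((round_count, delivery_rate))
        self._rtt.append((now_s, rtt_s))
        # Drop samples that have fallen out of their windows.
        while self._bw and self._bw[0][0] <= round_count - self.bw_window_rounds:
            self._bw.popleft()
        while self._rtt and self._rtt[0][0] < now_s - self.rtt_window_s:
            self._rtt.popleft()

    def btl_bw(self) -> float:
        return max(rate for _, rate in self._bw)

    def rt_prop(self) -> float:
        return min(rtt for _, rtt in self._rtt)

    def bdp_bytes(self) -> float:
        return self.btl_bw() * self.rt_prop()

    def cwnd_bytes(self) -> float:
        return self.cwnd_gain * self.bdp_bytes()


model = BbrModel()
model.on_ack(0, 0.0, 1_000_000.0, 0.05)
model.on_ack(1, 0.1, 2_000_000.0, 0.04)
print(model.btl_bw(), model.rt_prop(), model.cwnd_bytes())
```

Note that packet loss does not appear anywhere in this model, which is why the table lists "N/A" for BBRv1's loss reaction.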

By QUIC, we mean some version of HTTP that uses QUIC as its transport protocol. HTTP/3 [8] is the standardized mapping of HTTP onto IETF QUIC and uses QPACK for header compression. However, some of the CDN's clients use HTTP/1.1 or even HTTP/2 over QUIC.

Congestion window size

The following figure shows a KDE (kernel density estimate) plot of the congestion window (CWND) size achieved by the three CCAs for dynamic content. Cubic generally shows the poorest performance of the three CCAs in all regions. Both BBR variants maintain a larger CWND than Cubic, but there are differences between regions: BBRv1 outperforms BBRv2 in Europe, North America, and the Middle East, even though BBRv2 is intended as an improvement over BBRv1.
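For readers less familiar with KDE plots: each curve is a smoothed estimate of the probability density of the CWND samples collected for one CCA, obtained by placing a Gaussian kernel on every sample and summing. The minimal pure-Python sketch below shows the idea using the common 1.06·σ·n^(-1/5) bandwidth rule of thumb; it is not the plotting code behind the figure, plotting libraries may choose the bandwidth differently, and the sample values are hypothetical.

```python
import math
import statistics
from typing import Callable, Sequence


def gaussian_kde(samples: Sequence[float]) -> Callable[[float], float]:
    """Return a 1-D Gaussian kernel density estimate of `samples`."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    sigma = statistics.stdev(samples)
    if sigma == 0:
        raise ValueError("samples have zero variance")
    h = 1.06 * sigma * n ** (-1 / 5)  # rule-of-thumb bandwidth
    norm = 1.0 / (n * h * math.sqrt(2 * math.pi))

    def density(x: float) -> float:
        # Sum of Gaussian bumps centred on each sample, normalised to integrate to 1.
        return norm * sum(math.exp(-0.5 * ((x - s) / h) ** 2) for s in samples)

    return density


cwnd_kb = [10.0, 12.0, 15.0, 20.0, 22.0]  # hypothetical CWND samples in KB
kde = gaussian_kde(cwnd_kb)
print([round(kde(x), 4) for x in (10.0, 15.0, 20.0)])
```

A wider curve in such a plot indicates a less stable CWND, while a curve shifted to the right indicates a larger typical CWND.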

CPU utilization

Both BBRv1 and BBRv2 are much more computationally complex than Cubic. Cubic is essentially event-driven: each loss event shrinks the congestion window (CWND) by a constant factor, and ACKs grow it along a cubic function of time, so the algorithm needs only minimal bookkeeping. In contrast, both BBRv1 and BBRv2 maintain a state machine, which is much more computationally expensive.

BBRv2 maintains more parameters than BBRv1, which adds to the computational complexity.
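To illustrate the extra bookkeeping, here is a heavily simplified sketch of the BBRv1 state machine as described in [7]: Startup paces at a gain of 2/ln 2 until the bandwidth estimate stops growing, Drain removes the queue built during Startup, ProbeBW cycles through a fixed list of pacing gains, and ProbeRTT is entered when the minimum RTT has not been refreshed for 10 seconds and lasts about 200 ms. The `Signals` structure and the function names are hypothetical, and the additional BBRv2 state (such as the loss-rate target and inflight bounds) is not shown.

```python
import math
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    STARTUP = auto()
    DRAIN = auto()
    PROBE_BW = auto()
    PROBE_RTT = auto()


HIGH_GAIN = 2 / math.log(2)  # ~2.89, Startup pacing gain
PROBE_BW_GAINS = [1.25, 0.75, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
MIN_RTT_EXPIRY_S = 10.0


@dataclass
class Signals:
    """Simplified per-ACK inputs to the state machine (hypothetical)."""
    now_s: float
    filled_pipe: bool          # bandwidth stopped growing by >= 25% for 3 rounds
    inflight_bytes: float
    bdp_bytes: float
    min_rtt_stamp_s: float     # when the min RTT estimate was last refreshed
    probe_rtt_done_s: float    # when ProbeRTT may end (~200 ms after entry)


def next_mode(mode: Mode, s: Signals) -> Mode:
    # A stale min RTT forces ProbeRTT from any other mode.
    if mode is not Mode.PROBE_RTT and s.now_s - s.min_rtt_stamp_s > MIN_RTT_EXPIRY_S:
        return Mode.PROBE_RTT
    if mode is Mode.STARTUP and s.filled_pipe:
        return Mode.DRAIN
    if mode is Mode.DRAIN and s.inflight_bytes <= s.bdp_bytes:
        return Mode.PROBE_BW
    if mode is Mode.PROBE_RTT and s.now_s >= s.probe_rtt_done_s:
        return Mode.PROBE_BW if s.filled_pipe else Mode.STARTUP
    return mode


def pacing_gain(mode: Mode, cycle_index: int = 0) -> float:
    if mode is Mode.STARTUP:
        return HIGH_GAIN
    if mode is Mode.DRAIN:
        return 1 / HIGH_GAIN  # inverse of Startup gain drains the queue
    if mode is Mode.PROBE_BW:
        return PROBE_BW_GAINS[cycle_index % len(PROBE_BW_GAINS)]
    return 1.0  # ProbeRTT
```

Even in this reduced form, every ACK has to update filters, check timers, and evaluate transitions, whereas Cubic mostly evaluates one closed-form window function.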

Nevertheless, we have not observed any measurable difference in CPU usage between Cubic, BBRv1, and BBRv2, so in our deployment the additional overhead of BBRv1 and BBRv2 is negligible.

Summary

Our preliminary results show that QUIC is steadily increasing its share of overall internet traffic. However, new protocols also come with trade-offs that must be tackled before they can be used effectively, for example, the lack of kernel support and the large number of implementations, some of which support only a subset of QUIC's features. In addition, our analysis shows that neither the default settings nor the choice of CCA is universally optimal: a configuration that works well in one region does not necessarily perform equally well in another.

The key conclusion is that BBRv1 outperforms BBRv2 in Europe, North America, and the Middle East, where it achieves a larger congestion window, even though BBRv2 is intended as an improvement over BBRv1. In Asia, on the other hand, BBRv2 performs better, achieving a more stable congestion window than Cubic and BBRv1.

If this topic interests you, the references below offer useful context. I’m also happy to discuss QUIC and dynamic content delivery. Just reach out to me.

Performance Engineer

[1] Bruce M. Maggs and Ramesh K. Sitaraman. 2015. Algorithmic Nuggets in Content Delivery. SIGCOMM Comput. Commun. Rev. 45, 3 (July 2015), 52–66. https://doi.org/10.1145/2805789.2805800

[2] QUIC Working Group. https://quicwg.org/

[3] Google Quiche. https://github.com/google/quiche/

[4] Quiche Connection Stats. https://github.com/google/quiche/blob/7e46599a107e58d3cd289e7505ca4644f18c1423/quiche/quic/core/quic_connection_stats.h

[5] Jana Iyengar and Martin Thomson. 2021. QUIC: A UDP-Based Multiplexed and Secure Transport. RFC 9000. https://doi.org/10.17487/RFC9000

[6] Jana Iyengar and Ian Swett. 2021. QUIC Loss Detection and Congestion Control. RFC 9002. https://doi.org/10.17487/RFC9002

[7] Neal Cardwell, Yuchung Cheng, C. Stephen Gunn, Soheil Hassas Yeganeh, and Van Jacobson. 2017. BBR: Congestion-Based Congestion Control. Commun. ACM 60, 2 (Jan. 2017), 58–66. https://doi.org/10.1145/3009824

[8] Mike Bishop. 2022. HTTP/3. RFC 9114. https://doi.org/10.17487/RFC9114